Getting started#

Command line interface#

You can analyse a dataset used the command line interface as follows

python -m mps_motion analyze PointH4A_ChannelBF_VC_Seq0018.nd2

of equivalently

mps-motion analyze PointH4A_ChannelBF_VC_Seq0018.nd2

Here PointH4A_ChannelBF_VC_Seq0018.nd2 is an example file containing cardiac cell data.

Note that in order to read these data you need to also install the mps package.

To see all available options for the cli you can do

python -m mps_motion analyze --help

See Command line interface for more info.

Computing displacement, velocity and strain#

First we need to import the required libraries

from pathlib import Path

import matplotlib.pyplot as plt # For plotting

import mps # Package to load data

import mps_motion # Package for motion analysis

import logging

# Set loglevel to WARNING to not spill the output

mps_motion.set_log_level(logging.WARNING)

Next, let us download to sample data. There is one available dataset here, but you should swap out the paths here to the path to your own data.

path = Path("data.npy")

if not path.is_file():

mps_motion.utils.download_demo_data(path)

Now we will read this file using the cardiac-mps package and print some info about the file

# Load raw data from Nikon images

data = mps.MPS(path)

print(data.info)

{'num_frames': 236, 'dt': np.float64(25.004229346742502), 'time_unit': 'ms', 'um_per_pixel': 1.08615103979884, 'size_x': 613, 'size_y': 145}

Now, we will create an optical flow object which is the object we use to run the motion tracking software. Here we have chosen the Farneback optical flow algorithm.

opt_flow = mps_motion.OpticalFlow(data, flow_algorithm="farneback")

To list available optical flow algorithms you can use

mps_motion.list_optical_flow_algorithm()

['farneback', 'dualtvl1', 'lucas_kanade', 'block_matching']

Before we can run the motion analysis we need to estimate a suitable reference frame. We can do this by first estimate the velocity (let us use a spacing of 5)

v = opt_flow.get_velocities(spacing=5)

print(v)

VectorFrameSequence((613, 145, 231, 2), dx=1.08615103979884, scale=1.0)

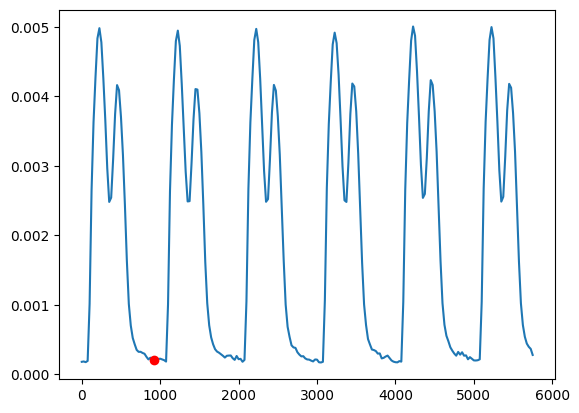

Let us compute the norm and use an algorithm for estimated the reference frame. This algorithm will use the the zero velocity baseline a find a frame where the velocity is zero. We must also provide the time stamps with the same length as the velocity trace

v_norm = v.norm().mean().compute()

reference_frame_index = mps_motion.motion_tracking.estimate_referece_image_from_velocity(

t=data.time_stamps[:-5],

v=v_norm,

)

reference_frame = data.time_stamps[reference_frame_index]

print(f"Found reference frame at index {reference_frame_index} and time {reference_frame:.2f}")

Found reference frame at index 37 and time 925.11

Let us also plot the velocity trace and mark the point where the reference frame is chosen

fig, ax = plt.subplots()

ax.plot(data.time_stamps[:-5], v_norm)

ax.plot([reference_frame], [v_norm[reference_frame_index]], "ro")

plt.show()

We can now run the optical flow algorithm to extract the displacements. We will first perform a downsampling of the data to a scalar of 0.4 (i.e 40% of the original size) to make the problem less computational expensive

u = opt_flow.get_displacements(reference_frame=reference_frame, scale=0.6)

print(u)

VectorFrameSequence((367, 87, 236, 2), dx=1.810251732998067, scale=0.6)

We see that the object we get back is a VectorFrameSequence. This is a special object that represents a vector field for each image in the sequence of images, and we see that is has dimension (number of pixels in x \(\times\) number of pixels in x \(\times\) number of time steps \(\times\) 2) where the final two dimensions are the \(x-\) and \(y-\) component of the vectors. If we take the norm of this VectorFrameSequence we get a FrameSequence

u_norm = u.norm()

print(u_norm) # FrameSequence((817, 469, 267), dx=0.8125, scale=0.4)

FrameSequence((367, 87, 236), dx=1.810251732998067, scale=0.6)

Note that the norm here represents the norm at each pixel in the stack of images. To get a proper trace we can for example compute the mean across all the pixels. Note that the arrays we work with here are lazy evaluated (using dask arrays) so we need to also call the .compute method to get some actual results.

# Compute mean of the norm of all pixels

u_norm_mean = u_norm.mean().compute()

Let us also plot the trace

fig, ax = plt.subplots()

ax.plot(data.time_stamps, u_norm_mean)

ax.set_title("Displacement norm")

plt.show()

We can also create a movie with the displacment vectors. Since we now have scaled the displacement we would also need to send in a scale data set for the background images

scaled_data = mps_motion.scaling.resize_data(data, scale=u.scale)

movie_path = Path("motion.mp4")

movie_path.unlink(missing_ok=True)

mps_motion.visu.quiver_video(scaled_data, u, movie_path, step=12, vector_scale=4)

Create quiver video at motion.mp4: 0%| | 0/236 [00:00<?, ?it/s]

Create quiver video at motion.mp4: 50%|█████ | 118/236 [00:00<00:00, 1172.88it/s]

Create quiver video at motion.mp4: 100%|██████████| 236/236 [00:00<00:00, 1176.39it/s]

Create quiver video at motion.mp4: 100%|██████████| 236/236 [00:00<00:00, 1171.53it/s]

2026-02-23 21:42:09,952 [2434] WARNING imageio_ffmpeg: IMAGEIO FFMPEG_WRITER WARNING: input image is not divisible by macro_block_size=16, resizing from (366, 86) to (368, 96) to ensure video compatibility with most codecs and players. To prevent resizing, make your input image divisible by the macro_block_size or set the macro_block_size to 1 (risking incompatibility).

Now we can load the movie

from IPython.display import Video

Video(movie_path)

From the displacement we can also compute several other mechanics features, such as the velocity and strain. This is most conveniently handled by first creating a Mechanics object

mech = mps_motion.Mechanics(u=u, t=data.time_stamps)

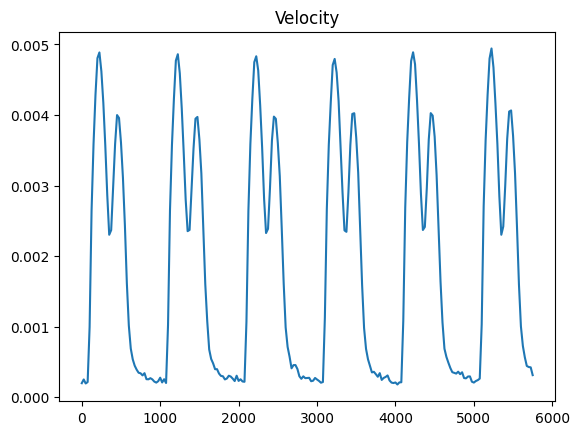

For example we can compute the velocity using a spacing of 5

spacing = 5

v = mech.velocity(spacing=spacing)

v_norm_mean = v.norm().mean()

fig, ax = plt.subplots()

ax.plot(data.time_stamps[:-spacing], v_norm_mean)

ax.set_title("Velocity")

plt.show()

Note that this is different for the velocity trace we computed in the beginning, since here we are compting the velocity from the displacemens (which is also down-scaled) while in the beginning we where computing the velocity directly using the optical flow.

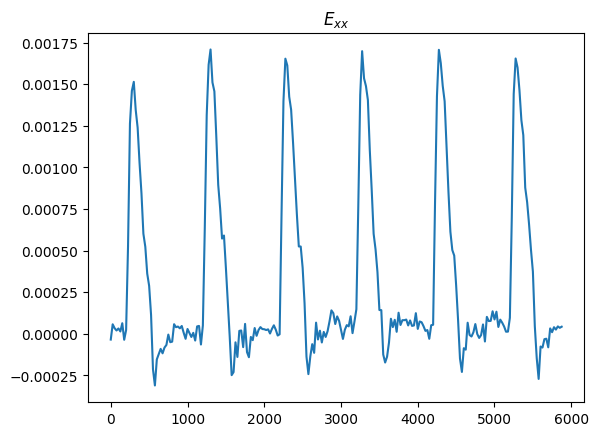

We can also compute the mean value of the \(x\)-component of the Green-Lagrange strain tensor

# Green-Lagrange strain

print(mech.E) # TensorFrameSequence((817, 469, 267, 2, 2), dx=0.8125, scale=0.4)

# Plot the X-component of the Green-Lagrange strain

fig, ax = plt.subplots()

ax.plot(data.time_stamps, mech.E.x.mean().compute())

ax.set_title("$E_{xx}$")

plt.show()

TensorFrameSequence((367, 87, 236, 2, 2), dx=1.810251732998067, scale=0.6)